Node Install - Docker Image

Sandfly nodes allow the system to connect to Linux hosts to do agentless investigations and forensic analysis. At least one node must be running at all times; however, we recommend starting multiple node containers for redundancy and performance.

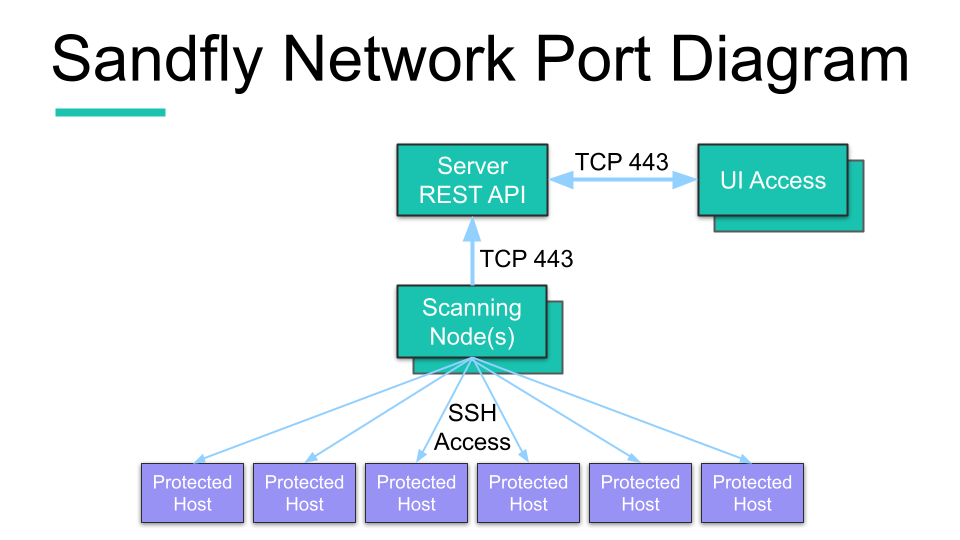

As the diagram shows, the nodes are the workhorses of Sandfly. The nodes connect to the protected hosts over SSH to do investigations and report the results back to the server. Each node has 500 threads and can easily scan many times this number of hosts during operation.

Sandfly High-Level Overview

We recommend starting more than one node container for normal operation. Each new node container provides 500 more scanning threads, so it is easy to build enough capacity to protect many hosts, even in large network deployments. The nodes connect to the server and handle scanning requests on demand with automatic load balancing. The only action required is to ensure that these nodes have SSH access to the hosts they are meant to protect.

The containers can all run on the same Virtual Machine (VM), but for security reasons we recommend that this VM not be the same one used to host the server. The number of node containers a host can run is limited only by its available RAM and CPU.

The rest of these instructions will get the host ready to run the node containers.

Standard Security vs. Maximum Security Installation

The section on Standard Security vs. Maximum Security installation goes over the differences in how to deploy Sandfly for your environment. If running a very small deployment, or testing the product, it is reasonable to consider using the Standard Security mode. For users with resources to do so, we highly recommend the Maximum Security installation which runs the server and node(s) on separate hosts, be it VMs or bare-metal servers.

I Want to Use the Standard Security Install

If you accept the risks of running the server and scanning node containers on the same host / VM, most of the instructions here can be skipped. In this case, go to the Start the Node section below to start a scanning node on the same system as the server and proceed to log in to begin using Sandfly.

I Want to Use the Maximum Security Install

To use the recommended separate hosts for running the server and scanning nodes, complete all of the steps outlined below.

Download Setup Archive

The setup package, which contains everything needed to install and run Sandfly in any supported environment, is located on Sandfly Security's GitHub. Go to the link below to obtain the latest version:

https://github.com/sandflysecurity/sandfly-setup/releases

For a checksum verification of the downloaded file, its sha256 hash is provided to the right of the package name.

Download sandfly-setup-5.6.0.tgz onto the host and extract the archive into a desired location. The example command uses the current directory, but the archive can be placed in any non-volatile, local path:

wget https://github.com/sandflysecurity/sandfly-setup/releases/download/v5.6.0/sandfly-setup-5.6.0.tgz

tar -xzvf sandfly-setup-5.6.0.tgz

Once the archive has been extracted, there should be a directory named sandfly-setup. This is where all the operations below will take place.
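The checksum mentioned earlier can be checked from the command line. The following is a minimal sketch, assuming sha256sum (GNU coreutils) is installed; verify_sha256 is a hypothetical helper name, and the expected hash must be copied from the releases page.

```shell
# Hypothetical helper: compare a file's SHA-256 digest with an expected value.
# Assumes sha256sum (GNU coreutils) is available on the host.
verify_sha256() {
    file="$1"
    expected="$2"
    # sha256sum prints "<digest>  <filename>"; keep only the digest.
    actual=$(sha256sum "$file" | awk '{print $1}')
    if [ "$actual" = "$expected" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file" >&2
        return 1
    fi
}

# Usage (paste the hash shown next to the package name on the releases page):
#   verify_sha256 sandfly-setup-5.6.0.tgz <sha256-from-releases-page>
```

A non-zero return status means the download should not be used.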

Copy Over Config JSON from the Server to the Node

We now need to copy over the generated node config JSON file from the server. This file is populated with all cryptographic keys and related setup information for the node to automatically connect to the server and operate.

Open two terminal windows; one will need to be connected to the server, and the other to the node. When copying the file, use scp or any other secure method.
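If scp is available between the two hosts, the file can be pulled in one step instead of using the copy and paste procedure described next. This is only a sketch: fetch_node_config is a hypothetical helper, admin@sandfly-server is a placeholder SSH destination, and it assumes sandfly-setup was extracted into the home directory on both hosts.

```shell
# Hypothetical helper, run ON THE NODE: pull config.node.json from the server.
# $1 is an SSH destination such as admin@sandfly-server (placeholder name).
# $2 is the local setup_data directory (defaults to the path used in this guide).
fetch_node_config() {
    server="$1"
    dest="${2:-$HOME/sandfly-setup/setup/setup_data}"
    # The remote path is relative to the remote user's home directory.
    scp "$server:sandfly-setup/setup/setup_data/config.node.json" \
        "$dest/config.node.json"
}

# Usage:
#   fetch_node_config admin@sandfly-server
```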

Go to the setup_data directory on the server and copy the configuration text:

# ON SERVER

cd ~/sandfly-setup/setup/setup_data

cat config.node.json

<copy contents>

Go to the setup_data directory on the node and paste the configuration text into the file:

# ON NODE

cd ~/sandfly-setup/setup/setup_data

cat > config.node.json

<paste contents>

<CTRL-D>

CAUTION: It Is Possible To Create an Invalid Configuration File

Copying and pasting the text between screens can cause minor changes in the created config.node.json file that will cause an error later in the install process. This situation occurs most often when using the "cat" command. Pasting into a config.node.json file opened in a preferred text editor is less likely to cause this issue.

If the text editor paste method is not used, we also recommend validating the JSON structure of the file before proceeding to the next step. The JSON can be validated with a method of your preference or, if python3 is installed, quickly checked from the command line:

python3 -mjson.tool "./config.node.json" > /dev/null

The entire config.node.json file must be copied with all keys intact. Most of these values should not be altered unless advised to do so by Sandfly Security.
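The python3 check above prints nothing on success, so it can be wrapped to give an explicit result. A minimal sketch, assuming python3 is installed; validate_node_config is a hypothetical helper name. It checks JSON syntax only and cannot confirm that every key was copied.

```shell
# Hypothetical wrapper around the json.tool check shown above.
# Validates JSON syntax only; it does not check that all keys are present.
validate_node_config() {
    file="${1:-./config.node.json}"
    if python3 -m json.tool "$file" > /dev/null 2>&1; then
        echo "valid JSON: $file"
    else
        echo "invalid JSON: $file" >&2
        return 1
    fi
}

# Usage, from the setup_data directory on the node:
#   validate_node_config ./config.node.json
```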

Delete the Node Config File

Sandfly uses high performance elliptic curve cryptography to secure SSH keys in the server database. To ensure these SSH keys are safe in the event of server compromise, the secret keys used to decrypt them are only stored on the scanning nodes.

Because of the above, we do not want the server to have both public and private keys for the nodes. After the node config JSON is copied onto the node(s), it needs to be removed from the server.

CAUTION: Confirm That a Valid Key Is on a Node

Before deleting the SSH Key on the server, ensure that there is a valid copy of it on at least one of the nodes. Once the key is deleted it cannot be regenerated or restored. A re-installation of Sandfly would need to be done in order to generate another working key pair.

Go into the server setup_data directory and delete the config.node.json file. The server only needs the config.server.json file present.

If possible, use a secure delete method on the node config file:

# ON SERVER:

shred -u ~/sandfly-setup/setup/setup_data/config.node.json

Otherwise use the standard delete method:

# ON SERVER:

rm ~/sandfly-setup/setup/setup_data/config.node.json
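The two delete methods above can be combined so that shred is used when present and rm otherwise. This is a sketch under that assumption; remove_node_config is a hypothetical helper name. It applies to the Maximum Security install only and should be run on the server only after a valid copy of the file exists on a node.

```shell
# Hypothetical helper, run ON THE SERVER (Maximum Security install only).
# Uses shred -u when it is installed, otherwise falls back to rm.
remove_node_config() {
    file="${1:-$HOME/sandfly-setup/setup/setup_data/config.node.json}"
    if command -v shred > /dev/null 2>&1; then
        # Overwrite the file contents, then remove the file.
        shred -u "$file"
    else
        rm -f "$file"
    fi
}

# Usage:
#   remove_node_config
```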

IMPORTANT: DELETE THE SECRET KEY (Maximum Security Install Only)

The node config (config.node.json) must be removed from the server to ensure full security of the SSH credentials within Sandfly. Do not delete this file if using the Standard Security installation.

Once the secret key has been deleted from the server, start the node.

Start the Node

At this point, determine how many node containers to start. At least one needs to be running for Sandfly to function.

Start One Node Container

A single instance can be started with the following command:

~/sandfly-setup/start_scripts/start_node.sh

The Docker image will be loaded if it does not already exist and the node will start if the keys from above were copied over correctly.

While we generally recommend running multiple node instances, there are a few reasons to run just one initially. First, if installing Sandfly for the first time, having only one instance helps with debugging or monitoring, as there will only be one container and associated log. Second, the host / VM running the node may not have sufficient CPU and/or RAM resources for more. One node is sufficient for initial testing or light use, but eventually it is advisable to run multiple containers for regular use.

Start Additional Containers

Multiple node containers can be started on the same system to get more performance and redundancy by simply running the start_node.sh script repeatedly. Make sure that the host has sufficient RAM to run multiple node containers before doing this.

root@example:~/sandfly-setup/start_scripts# ./start_node.sh

0106c87dbfd304b3f6fef847702a41f603eb5e625c7b6194ba5fd30019533421

root@example:~/sandfly-setup/start_scripts# ./start_node.sh

9ecc25cdaae72589d4792a01989ab73001bcf400da05cfd436a54e9defc38be9

root@example:~/sandfly-setup/start_scripts# ./start_node.sh

a8c3b80228c47a7feabf0dcbee89cbd6a2d5abbe80ec7b2a61fc86ed246bfbd7
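Running the script repeatedly, as in the session above, can also be done with a loop. A minimal sketch; start_nodes is a hypothetical helper name, and the default script path assumes sandfly-setup was extracted into the home directory.

```shell
# Hypothetical helper: run the start script a given number of times.
# $1 is the number of node containers to start.
# $2 is the start script (defaults to the path used in this guide).
start_nodes() {
    count="$1"
    script="${2:-$HOME/sandfly-setup/start_scripts/start_node.sh}"
    i=0
    while [ "$i" -lt "$count" ]; do
        # Stop at the first failure so a problem is not repeated.
        "$script" || return 1
        i=$((i + 1))
    done
}

# Usage:
#   start_nodes 3
```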

TIP: We Recommend Running Multiple Containers

We recommend running multiple node containers, which can be on a single host instance and/or on individual hosts. Running multiple containers provides much higher performance, and redundancy should a container exit unexpectedly.

Each node container runs 500 scanning threads. For each node container added onto the system the scanning capacity will be expanded by 500 threads.

For instance, running 5 nodes provides 2500 scanning threads, potentially allowing it to scan 2500 hosts concurrently. And should a container die unexpectedly, there would still be sufficient capacity for scanning to continue uninterrupted.
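The arithmetic is simply the number of node containers multiplied by 500. As an illustration only (scan_threads and THREADS_PER_NODE are hypothetical names, not part of Sandfly):

```shell
# Threads provided by each node container, as stated in this guide.
THREADS_PER_NODE=500

# Hypothetical helper: total scanning threads for a given number of nodes.
scan_threads() {
    echo $(( $1 * THREADS_PER_NODE ))
}

# Usage:
#   scan_threads 5    # prints 2500
```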

Run the following command to see all of the running node containers on a host:

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

865c0520124e quay.io/sandfly/sandfly:5.6.0 "/opt/sandfly/start_…" 5 seconds ago Up 3 seconds boring_jang

3b9a82546aae quay.io/sandfly/sandfly:5.6.0 "/opt/sandfly/start_…" 7 seconds ago Up 5 seconds clever_burnell

92b33fe63f33 quay.io/sandfly/sandfly:5.6.0 "/opt/sandfly/start_…" 8 seconds ago Up 6 seconds goofy_blackwell
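To get just a count instead of the full listing, the output can be filtered by image. A sketch, assuming the image name shown in the listing above; count_node_containers is a hypothetical helper name. It counts every running container started from that image, so it only reflects node containers on a host that runs nothing else from it.

```shell
# Hypothetical helper: count running containers started from a given image.
# The default image name is taken from the docker ps listing above.
count_node_containers() {
    image="${1:-quay.io/sandfly/sandfly:5.6.0}"
    # --quiet prints one container ID per line; count the lines.
    docker ps --quiet --filter "ancestor=$image" | wc -l | tr -d ' '
}

# Usage:
#   count_node_containers
```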

CAUTION: Node Container RAM and CPU

Make sure that the host instance for the node containers has sufficient RAM before running many containers, and a couple of CPU cores available, to ensure that there are no performance issues.

A 4GB instance can run 3 containers comfortably. An 8GB instance can run 6 or 7 node containers. To run many node containers on a single instance, scale up the RAM and CPU accordingly.

The log of the node container can be viewed to make sure it is connected and functioning properly. One way to do this is to find out which Docker log file the container writes its output to after running the start script above.

Use the Docker name or container ID of the targeted container to find the unique log file name used for that container instance:

docker inspect 865c0520124e | grep LogPath

"LogPath": "/var/lib/docker/containers/865c0500124e4b119f36447a3556264a3996c5fd78eeee009e7fe10fbbe2e847/865c0500124e4b119f36447a3256264a3996c5fd78eeee009e7fe10fbbe2e847-json.log",

With the LogPath file information from the above command, the log can then be viewed. In the example below, the log is displayed with the tail command; because of the -f option, new log entries are appended to the output as they come in:

tail -f /var/lib/docker/containers/865c0500124e4b119f36447a3556264a3996c5fd78eeee009e7fe10fbbe2e847/865c0500124e4b119f36447a3256264a3996c5fd78eeee009e7fe10fbbe2e847-json.log

{"log":"Setting fallback_directory to /dev/shm\n","stream":"stdout","time":"2024-02-02T15:31:03.680165719Z"}

{"log":"Concurrency set to 500\n","stream":"stdout","time":"2024-02-02T15:31:03.744270095Z"}

{"log":"Simulator multiplier set to 0\n","stream":"stdout","time":"2024-02-02T15:31:03.797034353Z"}

{"log":"Starting Node\n","stream":"stdout","time":"2024-02-02T15:31:03.959266465Z"}

{"log":"{\"time\":\"2024-02-02T15:31:03.964868301Z\",\"level\":\"INFO\",\"msg\":\"starting Sandfly node\",\"version\":\"5.6.0\",\"build_date\":\"2024-01-04T02:44:13Z\"}\n","stream":"stderr","time":"2024-02-02T15:31:03.972896661Z"}

{"log":"{\"time\":\"2024-02-02T15:31:03.964989048Z\",\"level\":\"INFO\",\"msg\":\"loading config file\",\"path\":\"conf/config.json\"}\n","stream":"stderr","time":"2024-02-02T15:31:03.972982467Z"}

{"log":"{\"time\":\"2024-02-02T15:31:04.00731022Z\",\"level\":\"INFO\",\"msg\":\"successfully loaded additional CA certificates from config\"}\n","stream":"stderr","time":"2024-02-02T15:31:04.007616874Z"}

{"log":"{\"time\":\"2024-02-02T15:31:04.007362626Z\",\"level\":\"INFO\",\"msg\":\"node thread limit\",\"threads\":500}\n","stream":"stderr","time":"2024-02-02T15:31:04.008122668Z"}

...

Leaving this example command running allows the node messages to continue to scroll by, often very quickly when scans are occurring. This is not necessary for normal Sandfly operations, but it can be useful for debugging or monitoring should there be potential errors or performance issues.
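The two steps above (looking up LogPath, then tailing the file) can be combined. A minimal sketch; node_log_path is a hypothetical helper name, and it relies on the --format option of docker inspect to print only the LogPath field. Reading files under /var/lib/docker usually requires root.

```shell
# Hypothetical helper: print only the log file path of a container.
# $1 is the Docker name or container ID.
node_log_path() {
    docker inspect --format '{{.LogPath}}' "$1"
}

# Usage (container ID taken from the docker ps listing above):
#   tail -f "$(node_log_path 865c0520124e)"
```

With Docker's default logging setup, `docker logs -f 865c0520124e` should stream the same messages without needing the file path.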

Alternatively, use this formatted log viewing method from our FAQ:

How to get a complete log from a Sandfly docker container?

The installation is almost complete; continue on to the next page.
