Scheduling Optimization

Sandfly scan schedules are easy to add, and Sandfly is designed to have a low impact on protected hosts. In certain environments, typically large and/or non-standard ones, there are additional factors to consider when scheduling scans.

The primary factor to consider is the effect on shared resources (e.g. SAN devices, NFS servers, VM host machines). When file and directory sandflies run on many hosts that use the same shared storage, the cumulative load on that common storage device may become noticeable. To minimize the load on shared resources, Sandfly runs schedules in "trickle mode" by default: instead of scanning all hosts as soon as the schedule start time arrives, Sandfly spreads the scans out across the entire schedule interval.
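To make the trickle idea concrete, here is a minimal sketch that spaces one scan per host evenly across a schedule interval. It is a hypothetical illustration, not Sandfly's scheduler or API: the function name `trickle_start_times` and the even spacing are assumptions, and Sandfly may order or pace hosts differently.

```python
from datetime import datetime, timedelta


def trickle_start_times(hosts, interval_start, interval):
    """Assign each host a start time, evenly spaced across one schedule interval.

    Hypothetical illustration of the trickle idea only; it is not Sandfly's
    scheduler, which may order or pace hosts differently.
    """
    if not hosts:
        return {}
    step = interval / len(hosts)  # equal gap between consecutive host scans
    return {host: interval_start + step * i for i, host in enumerate(hosts)}


# Four hosts on a 4-hour interval: one scan starts every hour instead of all at 00:00.
plan = trickle_start_times(["web01", "web02", "db01", "nfs01"],
                           datetime(2024, 1, 1, 0, 0), timedelta(hours=4))
for host, when in plan.items():
    print(host, when.strftime("%H:%M"))
```

Without trickling, all four hosts would hit shared storage at 00:00; with it, at most one host in this example is starting a scan at any given hour.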

Another important factor is sensitive hosts. These can be anything: heavily loaded hosts, lightweight devices with limited resources (e.g. IoT devices, network cameras), systems providing mission-critical services (e.g. a primary database, core routers), hosts running applications that are affected by even mild extra activity, hosts with operational requirements that limit when they can be scanned, or other cases. This subset of hosts may need extra attention when scheduling approved security scans.

Sandfly lets you configure scan schedules with these factors in mind to minimize the effect on your environment and protected hosts. In general, you will want to define host groups based on your environment, mark the hosts in each group with host tags, and then set up separate schedules with Trickle scanning for each group. The sections below describe how to do this.

Grouping

In general, having separate schedules for different host groups greatly improves overall performance. There are two aspects to consider when grouping:

By Sandfly Type

Not all sandflies are equal in terms of on-host impact. File, Directory, and to a much lesser extent Log and User sandflies access the filesystem, whereas Process sandflies affect the CPU. Separating schedules by sandfly type is therefore one way to reduce the associated resource impact.

- Lower-intensity sandfly types (process/user/log) can be scheduled to run more often and/or with a larger percentage of sandflies selected. This tier also includes the Recon sandflies, which are active by default.

- Higher-intensity sandfly types (file/directory) can be scheduled to run less often and/or with a smaller percentage of sandflies selected.

Additionally, schedules can include or exclude specific sandflies. If there are particular sandflies you want to run less often on certain hosts, exclude them from the more frequent schedules and create a separate, less frequent schedule that includes them.
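The type-based split and the per-sandfly exclusion can be modeled as a simple filter over a catalog of sandflies. This is a hypothetical sketch, not Sandfly's data model: the `Sandfly` class, `select_sandflies`, and the sandfly names (`proc_check_a` and so on) are invented for illustration, and the type tiers follow the lists above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sandfly:
    name: str          # hypothetical sandfly name
    sandfly_type: str  # e.g. "process", "user", "log", "recon", "file", "directory"


LOW_INTENSITY = {"process", "user", "log", "recon"}
HIGH_INTENSITY = {"file", "directory"}


def select_sandflies(catalog, allowed_types, excluded_names=frozenset()):
    """Return the sandflies a schedule would run: allowed type and not explicitly excluded."""
    return [s for s in catalog
            if s.sandfly_type in allowed_types and s.name not in excluded_names]


catalog = [
    Sandfly("proc_check_a", "process"),
    Sandfly("log_check_a", "log"),
    Sandfly("file_check_a", "file"),
    Sandfly("dir_check_a", "directory"),
]

# Frequent schedule: low-intensity types, minus one sandfly moved to the slower schedule.
frequent = select_sandflies(catalog, LOW_INTENSITY, excluded_names={"log_check_a"})
# Less frequent schedule: high-intensity types plus the log type that includes the excluded sandfly.
infrequent = select_sandflies(catalog, HIGH_INTENSITY | {"log"})

print([s.name for s in frequent])    # ['proc_check_a']
print([s.name for s in infrequent])  # ['log_check_a', 'file_check_a', 'dir_check_a']
```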

By Host Sensitivity

You will likely want to put sensitive hosts in their own small groups (identified by tags), run lighter and/or less frequent scans on them using schedules specific to those host tags, and exclude those tags from general-purpose schedules.

Grouping Summary

There is no single best scheduling scenario; schedule optimization largely depends on the needs of your own environment. That said, the following high-level categories are a reasonable starting point for dividing hosts into groups for scheduling optimization:

- Linux hosts that act as a shared resource to other scanned hosts.

- Linux hosts that are sensitive to scanning and/or have other special access requirements.

- All other Linux hosts, potentially subdivided by role and/or so that scans of hosts using the same shared resource are interleaved.

Tagging

Every scan schedule can include and/or exclude hosts based on their host tags; this is how groups of similar hosts are assigned to each schedule. Once you have a plan for grouping hosts, the next step is to tag them with one or more host tags that align with the groupings.
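The include/exclude logic can be sketched as a set test per host. The semantics here are an assumption, not confirmed Sandfly behavior: an empty include set means "all hosts", otherwise a host needs at least one included tag, and any excluded tag removes it. The function `hosts_for_schedule` and the tag names are hypothetical.

```python
def hosts_for_schedule(hosts, include_tags=frozenset(), exclude_tags=frozenset()):
    """Pick hosts for one schedule from a {hostname: set_of_tags} mapping.

    Assumed semantics (hypothetical): no include tags means every host is a
    candidate; otherwise a host needs at least one included tag. Any
    excluded tag removes the host.
    """
    selected = []
    for name, tags in hosts.items():
        if include_tags and not (tags & include_tags):
            continue  # host lacks every included tag
        if tags & exclude_tags:
            continue  # host carries an excluded tag
        selected.append(name)
    return selected


hosts = {
    "nfs01": {"shared-storage"},
    "db-primary": {"sensitive", "db"},
    "web01": {"web"},
    "web02": {"web"},
}

# General-purpose schedule skips the shared-resource and sensitive groups.
general = hosts_for_schedule(hosts, exclude_tags={"sensitive", "shared-storage"})
# A lighter, tag-specific schedule covers only the sensitive group.
sensitive_only = hosts_for_schedule(hosts, include_tags={"sensitive"})

print(general)         # ['web01', 'web02']
print(sensitive_only)  # ['db-primary']
```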

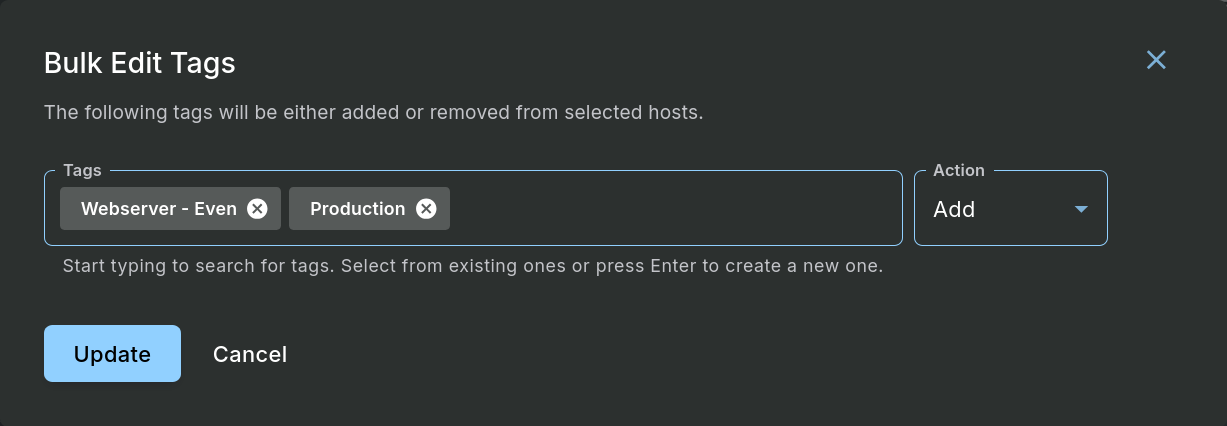

You can tag hosts in bulk on the Hosts Management page via the "Tag" button on the toolbar.

Bulk Edit Tagging via Hosts Management

Schedule

The last step is to put this plan into action by setting up the new schedules.

Refer to our "Adding Schedule - Scan Hosts" documentation for details on adding scan schedules via the user interface (UI).

Schedule Option Considerations

Scan schedules are controlled with the following options:

- A lower and upper bound on the time between scans, which can reduce overlapping load on shared resources.

- (Optional) A restricted time window during which the scan runs.

- Grouping of hosts via included and/or excluded tags.

- Sandfly types, which affect scanned hosts in different ways.

- The percentage of sandflies selected; the larger the percentage, the more sandflies there are to run and process.

- Trickle scan mode (the default), which should be used in most cases to spread scanning out further.
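Three of these options (the time range between scans, the restricted window, and the sandfly percentage) can be modeled in a few lines. This is a hypothetical sketch, not Sandfly's implementation: it assumes the next run is drawn uniformly between the lower and upper bounds, that the window is a daily time range that may cross midnight, and that at least one sandfly is chosen when any are available. All function names are invented for illustration.

```python
import random
from datetime import datetime, time, timedelta


def next_run(last_run, min_minutes, max_minutes, rng=random):
    """Pick the next run at a random point between the lower and upper bounds (assumed uniform)."""
    return last_run + timedelta(minutes=rng.uniform(min_minutes, max_minutes))


def in_window(when, start, end):
    """Return True if `when` falls inside a daily window; supports windows that cross midnight."""
    t = when.time()
    if start <= end:
        return start <= t < end
    return t >= start or t < end


def pick_sandflies(names, percent, rng=random):
    """Randomly choose about `percent`% of the candidates (assumed: at least one if any exist)."""
    if not names:
        return []
    count = min(len(names), max(1, round(len(names) * percent / 100)))
    return rng.sample(names, count)


rng = random.Random(7)                        # seeded so the run is repeatable
last = datetime(2024, 1, 1, 22, 0)
nxt = next_run(last, 60, 180, rng)            # somewhere 1 to 3 hours after 22:00
ok = in_window(nxt, time(22, 0), time(6, 0))  # hypothetical overnight-only window
chosen = pick_sandflies([f"sandfly_{i}" for i in range(20)], 25, rng)  # 5 of 20
print(nxt, ok, len(chosen))
```

Randomizing within a range, rather than using a fixed period, is one way schedules on different host groups avoid repeatedly landing on shared storage at the same moment.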

System-managed Schedules

Sandfly's Drift Detection feature includes options that automatically create schedules covering certain hosts. When using the Drift Detection wizard, you have the option of creating gather schedules (usually short-lived schedules that run for a small number of days and focus on more intensive scanning to provide a complete set of baseline results for model hosts) and scan schedules (long-term schedules that usually exist for the life of the drift profile).

When creating new drift profiles, consider how the system-managed schedules (if selected) fit into your overall scheduling strategy. If you are certain that a drift profile only performs drift detection with sandflies that are active by default (and can therefore be covered by your existing scheduled scans), consider not selecting the option to create a scan schedule for that drift profile.

The scheduler will not start a new scan against a host that already has a scan running, so schedules won't "stack up" and run simultaneously on a single host. However, system-managed schedules can cause scans to run more frequently on some hosts. System-managed schedules are usually limited in scope to the specific sandflies relevant to the types of drift being detected, so on most hosts their additional activity is less than that of a regular full scan.
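The "no stacking" rule can be pictured as a per-host guard checked whenever any schedule fires. This is a hypothetical model, not Sandfly's scheduler: the `ScanTracker` class and its methods are invented for illustration. It also shows why extra schedules still raise scan frequency: triggers that arrive after a scan finishes are allowed to run.

```python
class ScanTracker:
    """Hypothetical model of 'no stacking': at most one running scan per host."""

    def __init__(self):
        self._running = set()

    def try_start(self, host):
        """Start a scan unless one is already running on this host."""
        if host in self._running:
            return False  # skip this trigger; the host is already being scanned
        self._running.add(host)
        return True

    def finish(self, host):
        """Mark the host's scan as complete so later triggers can run."""
        self._running.discard(host)


tracker = ScanTracker()
print(tracker.try_start("web01"))  # True: general schedule starts a scan
print(tracker.try_start("web01"))  # False: drift schedule fires while that scan is running
tracker.finish("web01")
print(tracker.try_start("web01"))  # True: a later drift trigger runs, adding one more scan
```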
